A recent report from the Public Policy Institute reveals that the majority of California’s public school parents are uninformed about the new tests their children took this past year. And despite numerous concerns regarding the lack of validity, technological barriers, biases, and test administration problems, “test scores” soon will be released to the public.

The following includes adapted excerpts from a letter I sent to the California State Board of Education for the July 2015 State Board of Education meeting. My purpose in sharing this information is to draw attention to the lack of scientific validity of the test scores that are soon to be released to the public and to promote critical thinking about issues of fairness, accessibility, data security, and standardization in the test administrations.

It is important to consider that unless assessments are independently verified to adhere to basic standards of test development regarding validity, reliability, security, accessibility, and fairness in administration, the resulting scores will be meaningless and should not be used to make claims about student learning, progress, aptitude, or readiness for college or career (see Legal Implications of High Stakes Assessments: What States Should Know).

QUESTIONS:

Q1: How is standardization to be assumed when students are taking tests on different technological tools with vastly varying screen interfaces? Depending on the technology used (desktops, laptops, Chromebooks, and/or iPads), students would need different skills in typing, touch-screen navigation, and familiarity with the tool.

Q2: How are standardization and fairness to be assumed when students are responding to different sets of questions based on how they answer (or guess) on the adaptive sections of the assessments?

Q3: How is fairness to be assumed when large proportions of students do not have access at home to the technology tools that they are being tested on in schools? Furthermore, how can fairness be assumed when some school districts do not have the same technology resources as others for test administration?

Q4: How/why would assessments that had already been flagged with so many serious design flaws and user interface problems continue to be administered to millions of children without changes and improvements to the interface? (See report below)

Q5: How can test security be assumed when tests are being administered across a span of over three months and when login features allow some students to view a problem, log off, go home (and potentially research and develop an answer), and then come back, log in, and take the same section? (This process was reported by a test proctor who observed the login, viewing, and re-login sequence.)

Q6: Given serious issues in accessibility and the fact that the assessments have yet to be independently validated, how/why would the SmarterBalanced Assessment Consortium solicit agreements from nearly 200 colleges and universities to use 2015 11th Grade SBAC data to determine student access to the regular curriculum or to “remedial” courses? http://blogs.edweek.org/edweek/curriculum/2015/04/sbac.html.

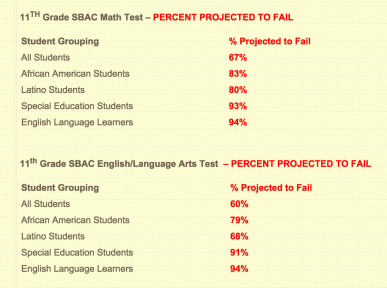

Projected failure rates disproportionately affect youth of color, students with special needs, and English Language Learners. Cut scores have yet to be validated, and the use of the test scores may be argued to contribute to systemic barriers that already limit access to higher education for students from historically underserved populations.

[Chart data from SBAC / Screenshot from post on J. Pelto’s Wait, What? blog]

Q7 (Background): I participated in the cut-score setting process in October 2014 after learning about the opportunity through a public announcement on social media. According to SBAC’s own documents, 7% of the feedback on cut-score setting came from the “general public.” “Smarter Balanced is inviting anyone who’s interested—you don’t have to be a teacher or even work in education—to register to participate in the ‘standard-setting’ process.”

It was apparent from the lack of screening that as long as an individual had a pulse and an email address, any member of the public who wished to participate was given open access to at least 30 test items and answers considered for use in the assessments. Although participants signed an electronic statement promising not to share any information from the activity, anyone (including test-prep profiteers) could have downloaded dozens of the consortium’s test items within a matter of minutes. It is appalling that such an epic breach of security would be allowed in the process of test development.

Q7: Given that hundreds of test items were openly accessible to large numbers of unscreened participants who took part in the public cut-score setting procedures, how can the State Board of Education ensure the security of test items used by the SmarterBalanced Consortium?

[Additional note (not included in original letter)]

One might also consider students’ sharing of test-related topics and posts on social media as a potential breach of content security. With a three-month testing span and countless new apps for anonymous sharing, the possibilities are endless. Still, testing companies seem laser-focused on monitoring students’ visible social media feeds, using controversial surveillance strategies that have raised serious privacy concerns (here, here, and here) in states using the PARCC versions of CCSS tests administered by Pearson. While public awareness and concern have focused primarily on Pearson’s activities, the SmarterBalanced Assessment Consortium has also been critiqued for its policies on student monitoring. As we consider issues of test security, we should acknowledge the thousands of potential, alternate, creative hashtags or other communication tools that youth may use, aside from the obvious #PARCC, #SBAC, and #SmarterBalanced tags, to discuss their experiences with the assessments. Let’s take a look at how [in]effective it was for the College Board to require sworn statements from test-takers to refrain from posting about the PSAT on social media:

Q8: Will the California State Board of Education hold the SmarterBalanced Assessment Consortium member states and test developers accountable to adhere to the basic terms, timelines, conditions, and agreements described in the Race to the Top Assessment Development Proposal? http://www.edweek.org/media/sbac_final_narrative_20100620_4pm.pdf [Note: Pages 47-50 offer detailed descriptions of intended features of the assessments and proposed supports for implementation. Readers are encouraged to compare and contrast these descriptions with what has actually been developed (evidence below).]

Q9: Since data gathering for the development of the SmarterBalanced Assessments has been conducted through a federal research grant, and the Public Policy Institute has determined that the majority of parents have been uninformed about the new tests, how can the State Department of Education and/or the SmarterBalanced Consortium ensure that basic protections for human subjects were upheld during the pilot and field test administrations over the past two years? http://www2.ed.gov/about/offices/list/ocfo/humansub.html.

Is it the responsibility of the SmarterBalanced Assessment Consortium, the federal government, or State/District Board of Education Trustees to ensure that student and parental rights and ethical protections for human subjects were not violated in the process of developing the new tests?

Q10 (Background): As you are aware, ETS (Educational Testing Service) has been awarded the multimillion-dollar contract to administer and score the tests in California http://www.kcra.com/news/whos-grading-your-kids-assessment-test-in-california/31857614. According to the following article, “ETS has lobbied against legislation to require agencies to ‘immediately initiate an investigation’ after complaints on ‘inadequate’ testing conditions. It also lobbied against a bill designed to safeguard pupil data in subcontracting.” http://www.washingtonpost.com/blogs/answer-sheet/wp/2015/03/30/report-big-education-firms-spend-millions-lobbying-for-pro-testing-policies/

Q10a: How will the State Board of Education ensure that the student data being provided to third-party entities (unbeknownst to many parents and students) is secure?

Q10b: What are the responsibilities of the State and districts to inform parents of how their children’s data may be used by third-party entities should they choose to take part in the testing?

Evidence of Testing Barriers and Implementation Problems

The Board is encouraged to consider the following evidence documenting serious concerns regarding the validity, reliability, security, accessibility, and fairness of the SmarterBalanced Assessments.

SmarterBalanced Mathematics Tests Are Fatally Flawed and Should Not Be Used documents serious user-interface barriers and design flaws in the SmarterBalanced Mathematics assessments. According to the analyses, the tests:

- “Violate the standards they are supposed to assess;

- Cannot be adequately answered by students with the technology they are required to use;

- Use confusing and hard-to-use interfaces; or

- Are to be graded in such a way that incorrect answers are identified as correct and correct answers as incorrect.

No tests that are so flawed should be given to anyone. Certainly, with stakes so high for students and their teachers, these Smarter Balanced tests should not be administered. The boycotts of these tests by parents and some school districts are justified. Responsible government bodies should withdraw the tests from use before they do damage. Read the full report…”

Rasmussen notes that the numerous design flaws and interface barriers had been brought to the attention of the SmarterBalanced Assessment Consortium during the Spring 2014 pilot test and remained unresolved during the Spring 2015 test administration.

There are also misrepresentations of technology interface features in public awareness campaigns meant to inform parents and students about the new tests. According to a video on the BeALearningHero.org website, fractions spontaneously appear [in fraction form] on the screen as a visual feature of the new assessments:

However, the actual screens that students encounter on the tests are completely different from what is shown above, and the process of entering even one fraction into the text space provided is problematic.

Why are the actual screen representations of the tests not provided in the public education campaigns?

Further evidence of testing problems is included in the following clip, along with a selected portion of the transcript from the NAACP Press Conference on SBAC Testing in Seattle. The segment below features Jesse Hagopian; the rest of the press conference includes additional evidence and perspectives:

[Segment starts 7:00 minutes into the original video] …

“SBAC testing in Seattle has been an unmitigated disaster. We have had reports across this city of absolute testing meltdown in building after building. You’re going to hear today from some of the people who have experienced that first hand. They’ve experienced the technological glitches that have wasted hours of instructional time. They’ve experienced their computer labs and their libraries shut down for weeks at a time and unable to be used for research. And they’ve experienced the human disaster of labeling kids failing and seeing the impact that that has on children and crushing their spirits, turning them off to education… and you’ll hear that I think in graphic detail…”

Hagopian continued,

“…these tests are invalid… the SBAC test is invalid. That’s a bold statement. How can I make that claim? It’s not based on my own estimation. It’s based on the SmarterBalanced Consortium itself. The SmarterBalanced Consortium has acknowledged in a memo, that the test has not been proven to be externally valid. And yet our state rushed to implement this test all across the state?” See also http://sco.lt/729uev

In Maine, educators have also expressed concern regarding the validity of the SBAC exams. In “Test Fatally Flawed, School Officials Say,” Scott McFarland, principal of Mount Desert Elementary School, stated:

“I’ve seen enough of it, seen enough glitches to know that it’s invalid data.”

From EdSource, April 2015:

“Laura Bolton, a teacher at William Saroyan Elementary in Fresno, who spoke to EdSource earlier about her students’ keyboard challenges, recently gave the Smarter Balanced midterms to her 3rd-graders. She said her students struggled with the instructions and added that they were not appropriate for the age of her students.”

The article also describes high school students’ experiences with instructional and user-interface barriers. A ninth grader quoted in the article, for example, said “she didn’t know how to make the online calculator work on the test.” Another article reported that “Everyone was freaking out,” … with “students struggling to find the calculator and other function buttons on their tablets.”

An 11th grader was quoted as saying that “There was no paper available” to work out math problems. “We’d have to draw on the computer screen [with a stylus]. Being on a computer was distracting.”

From a Parent comment in “SBAC in Ca”:

“According to my child, there were numerous technical problems with test administration. Not much of the questions addressed things they had learned. Confusing. Bandwidth continued to be a problem along with tablets accepting answers. As parents, our district failed to notify us when testing would take place. Normally we are inundated with information about dates, times, how to feed our kids, how much sleep they needed, etc. Had I known when these tests would take place, I would have opted my child out. We get no feedback from the school, district or the state of results, which seems immensely wrong. I have to base this all on what my child told me as my school and district told me nothing.” (Emphasis added)

From a Principal/Administrator: “Accessibility in SmarterBalanced”:

“Our district recently completed the Smarter Balanced Field Test. I was very disappointed with accessibility features of the assessment. I have heard and read that the assessment has unprecedented accessibility features and provides avenues for students to participate. The accommodations and embedded features were incredibly confusing for students with disabilities and struggling learners. Students needed to click and drag or click and highlight. The use of language glossaries were found to be inaccurate on many occasions. I’m concerned about the quality checks in place for language translations and the manner in which student can locate a word to see the glossaries. Likewise, we were told that devices needed to be certified to use with the assessment. There are currently ‘no’ certified devices. Sad to develop a system that looks good on paper and creates a ‘good story’ for accessibility but falls short with real world application. To my knowledge there is no means established to get feedback on the accessibility features. That too is disappointing. How will improvements be made if there isn’t a means to solicit input from users? I fear that we will be faced with the same issues next year.” (Emphasis added)

From a Teacher who teaches Mild and Moderate Special Day classes:

“I feel it is unfair that my SDC students are being required to take grade level state tests when they are not taught or learning at that grade level. I would like my 8th grade students whom are learning 4th grade math, to take the test at the 4th grade level. There is no point in throwing complex algebra problems to students who are working on their basic math facts. This does not tell us anything about what the students know or what they are gathering from school.”

From a Teacher in LAUSD on using iPads for the SBAC testing:

“Today was the worst day I have had in a classroom for 20 years.

At my school we received the ipads last Thursday. We started testing today.

1. I have never used an iPad, and had no training whatsoever. They had them on campus for a week, yet they did not train us to use them and navigate how to help students out.

2. It took 45 minutes to get my 35 students logged in, both sessions.

3. We got kicked off during a session due to the fact that the server was busy.

4. Many of the readings were too long for the students.

5. Students had trouble highlighting text.

6. Some students could not answer questions because they could not see them on the iPads.”

From a Media Specialist on pilot tests:

“After administering the language arts and math pilot tests for smarter balanced, teachers gave me their feedback. The tests were extremely time consuming; some students were sitting for over two hours. The structure and content of the test were not age appropriate. Teachers found the level of student frustration to be very high–students were actually angry and acting out during the testing sessions. Students were giving up on questions based on the lengthy reading passages presented–even strong readers. One teacher described the testing sessions as ‘child abuse.’” (Emphasis and links added)

From a Teacher:

“We have been teaching critical reading strategies in all subjects at my junior high. I was very surprised to not see any easily accessible tools to mark the text on the language portion of the SBAC, including underlining key words or phrases, numbering paragraphs, marking out incorrect answers, and more. If we are to test our students with screen after screen of completely filled, multiple columns of text, we should have at minimum, easy to use tools to apply these strategies.”

From an Administrator:

“I recently took the 3rd grade sample test for SBAC. I was truly horrified and felt panic-stricken for the children who may potentially interact with this type of assessment. While the content rigor was greatly increased, my biggest concern was all of the varied technology responses that a 3rd grade student would have to have mastered to be able to present the correct answer. I highly suspect that students will know answers but will get them incorrect because they don’t know how to manipulate the varying technology skills required to show the correct answer. As a highly proficient adult, I had difficulty manipulating the technology required to plot points on a graph and when I returned to the directions for guidance, the directions fell short of what I needed to know. If my prediction is correct, we will not only have poor-looking data, but will also have false data.” (Emphasis added)

***

The purpose of my letter to the Board is to encourage responsible, ethical, and legal communications about the assessment data that will apparently soon be disseminated to the public. Students’ beliefs about themselves as learners will be caught up in the tangle of any explanations surrounding the assessments, and, as we know, decades of research demonstrate that such beliefs shape students’ subsequent effort and persistence in learning.

To be transparent with the public about the current status of the assessments, the Board is encouraged to consider full disclosure of the lack of validity of the assessments as well as the numerous complaints that have been documented about problematic test administrations. While this may be difficult from a public relations standpoint, misrepresenting the scores as valid or accurate would likely lead to more serious problems. If the State does plan to base policy decisions on test scores provided by the SmarterBalanced Assessment Consortium (including using this year’s full field administrations as any form of “baseline” for future comparisons), again, please consider the full range of legal and ethical implications.

[End of letter segment]

___________________________________________________________________________

[September 2015 Update]

To hear the State Board of Education discussion on the SmarterBalanced Assessments, including further questions that remain unanswered by the Executive Director of the SBAC at the September 2nd, 2015 California State Board of Education meeting, please click on the video below and view from ~1:45:00.

Please also see an Open Letter to the State Board of Education on the SBAC Test, including written comments made publicly by Dr. Doug McRae, a retired test and measurement specialist who has been communicating concern about the lack of validity of the SBAC Assessments directly to the State Board of Education over the past five years. His September 2nd, 2015 public comment may be viewed on the video linked to the image below from 02:50:55 to 02:52:52. The Open Letter has also been translated into Spanish and is accessible here: Carta abierta a la Junta de Educación del Estado de California respecto a la publicación de calificaciones [Falsas] #SBAC.

__________________________________________________________________________

For related news on testing:

Confessions of an Assessment Field Tester // EdWeek

Superintendents and Legislators, Beware: The SBAC Tsunami is Coming // Wait, What?

SmarterBalanced Delays Spur Headaches in Wisconsin, Montana, and Elsewhere // EdWeek

More than a Dozen States Report Trouble With Computerized Common Core Tests // Washington Post

Grading the Common Core: No Teaching Experience Required // NY Times

Five Principles to Protect Student Data Privacy // Student Privacy Matters

Superintendent: Computerized Testing Glitches are Breach of Contract // Las Vegas Sun

Mayor Scott Lang [Democrat] Launches Sharp Criticisms of PARCC, arguing that Commissioner Chester “miscalculates the problem of unfunded liability, when kids fall off the school system and become wards of society.”

More than 90% of English Language Learners Projected to Fail Common Core SBAC Test // Wait, What?

###

Thanks to Anthony Cody and Julian Vasquez-Heilig for feedback and guidance that supported this work.

For more, follow EduResearcher.com and @ConnectEdProf. For current updates on issues related to testing, please visit Testing, Testing, 1,2,3…

This is a very important letter, and I appreciate the amount of research that went into it. I will be sharing this with other teachers and parents in California. I find it difficult to believe how complacent we have become, but as parents start to receive feedback from the SBAC tests, there will be a lot of questions about why this was allowed to happen to their children. I was outraged when I took the practice test in 2013 and wrote to my administrators about all the tech issues that came up. The answer was a lot of shoulder shrugging and hoping for the best. Parents have been kept uninformed about the lack of validity of these tests – I am encouraged by research such as yours that this will soon be upended.

Belated gratitude for this note, maestramalinche (it looks like the initial reply I had sent didn’t get posted). I have also experienced the “shoulder shrugging and hoping for the best” response after having shared concerns. My hope is that this blog and other efforts to keep parents informed will help stem the tide when results are released. Future posts will feature others in academia and research who are also speaking out about the flawed design (and hence conclusions) of the tests. More to come soon, and thanks again for your comment.

Related to Q7: A district superintendent in another state contacted FairTest. He ran data from his and other districts and found a much greater correlation between SES and SBAC results than was true for previous tests. That is, SBAC would appear to be an even better predictor of family income/wealth/zip code. This will need to be investigated elsewhere (at the state level preferably, and for PARCC and other exams as well), with the results shared.

Thank you for this update, Monty. I will connect with FairTest as well, as I’m interested to learn more about where these analyses were done and how they may be re-shared.

Roxana, forgive me if this info was in your post and I missed it, but do you think the width of the testing window is a significant issue? I think with the old paper-and-pencil tests there was a three-week testing window in California. It seems like the new window is closer to six weeks, just judging by my own circle of teaching friends around the state.

Thank you, David. Yes, the extended testing time span is addressed within the questions (see Q5). My understanding from SBAC documents is that the testing window spans nearly 3 months, from March to June. In my view, the security issues are exacerbated by both the extended windows and the online formats. Paper-and-pencil tests may be argued to be more secure given flat, tabletop administrations and the inability to “log” on and off of paper (test booklets were sealed and sent to a centralized location where they were scored and stored). Now, with computerized administrations, the tests are much more open to being (literally) “visible” to others and also open to potential hacking. Access is controlled through logins and logouts rather than hard copies, and this apparently also complicates administrations with tech glitches, login problems, server interruptions, and entry errors that can lead to lost work and entire classrooms or schools needing to “re-enter” data (see 3rd video link above). As security breaches and hacking become more commonplace, data from the exams may not be as secure as we’d like to think. Last month, the UCLA Health System experienced a massive data breach of over 4.5 million records (that were supposed to be ‘secure’). I can’t help but wonder whether the neighboring UCLA CRESST data center (where all SBAC records from 10+ million children across the 18 participating states apparently converge) may also be vulnerable to a similar breach. The issues are endless and multiply the more one considers the many flaws in content, development, screen interface, and related administrations of the assessments. Nick Morrison has an interesting take on issues of security and privacy, suggesting that “perhaps it is not the invasion of privacy that we should be questioning, but whether high stakes tests have a place in the digital age.” http://www.forbes.com/sites/nickmorrison/2015/04/29/high-stakes-testing-and-privacy-dont-mix/

Thanks again for your comment and look forward to more.

Thank you, Dr. Marachi. This validates concerns I heard as a parent in 2013 from highly experienced teachers, and it justifies the wave of retirements and resignations among STEM teachers at that time over ethical concerns about these same issues. “Child abuse” summarizes our experience with middle and high school curricula and teaching-methods experimentation in CA for the last ten years.

My advice to parents is two simple words: private school.

Or even simpler: Move.

Reblogged this on Exceptional Delaware and commented:

Delaware Parents: Please read this. If you had ANY doubts about the Smarter Balanced Assessment, this is the place to fill in the holes. If you think it is the greatest test since white bread, please read this. And then ask yourself: Is this really the best they can do for my child? If your answer is no, opt your kid out. Do your child a favor.
